Copyright © 2013 Richard Dallaway. All rights reserved.

Printed in the United States of America.

Published by O'Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472.

O'Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or corporate@oreilly.com.

See http://oreilly.com/catalog/errata.csp?isbn=9781449362683 for release details.

The O'Reilly logo is a registered trademark of O'Reilly Media, Inc. Lift Cookbook, the cover image, and related trade dress are trademarks of O'Reilly Media, Inc.

While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. Use of the information and instructions contained in this work is at your own risk. If any code samples or other technology this work contains or describes is subject to open source licenses or the intellectual property rights of others, it is your responsibility to ensure that your use thereof complies with such licenses and/or rights.

978-1-449-36268-3

[LSI]

This is a collection of solutions to questions you might have while developing web applications with the Lift Web Framework.

The aim is to give a single, short answer to a specific question. When there are multiple approaches, or styles, we’ll give you one solution, but will point you at alternatives in the discussion.

Installing and Running Lift will get you up and running with Lift, but in other respects, this cookbook is aimed at practitioners and the questions they have asked. If this is the first time you’ve heard of Lift, you’ll want to look at:

Torsten Uhlmann’s Instant Lift Web Applications How-to (PACKT Publishing)

Timothy Perrett’s Lift in Action (Manning Publications)

I’ve mined the Lift mailing list for these recipes, but I’m not the only one. Recipes have been contributed by:

Jono Ferguson, who’s a Scala consultant based in Sydney, Australia. He can be found lurking on the Lift mailing list and occasionally helps out with Lift modules. Find him at https://twitter.com/jonoabroad and http://underscoreconsulting.com.

Franz Bettag, who’s been an enthusiastic Scala hacker for several years now. He joined the Lift team in January 2012 and actively tweets and blogs about his newest Scala adventures. Find him at https://twitter.com/fbettag.

Marek Żebrowski.

Peter Robinett, who’s a web and mobile developer and a Lift committer. He can be found on the Web and on Twitter.

Kevin Lau, who’s a founder of a few web apps with a focus in AWS cloud, iOS, and Lift.

Tony Kay.

Chenguang He.

You should join them: How to Add a New Recipe to This Cookbook tells you how.

The text of this cookbook is at https://github.com/d6y/lift-cookbook.

You’ll find projects for each chapter on GitHub.

Follow @LiftCookbook on Twitter.

Except where otherwise indicated, the examples use Lift 2.6 with SBT 0.13 and Scala 2.11.

The following typographical conventions are used in this book:

Indicates new terms, URLs, email addresses, filenames, and file extensions.

Constant widthUsed for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

Constant width boldShows commands or other text that should be typed literally by the user.

Constant width italicShows text that should be replaced with user-supplied values or by values determined by context.

This icon signifies a tip, suggestion, or general note.

This icon indicates a warning or caution.

This book is here to help you get your job done. In general, if this book includes code examples, you may use the code in this book in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing a CD-ROM of examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Lift Cookbook by Richard Dallaway (O’Reilly). Copyright 2013 Richard Dallaway, 978-1-449-36268-3.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

Safari Books Online is an on-demand digital library that delivers expert content in both book and video form from the world’s leading authors in technology and business.

Technology professionals, software developers, web designers, and business and creative professionals use Safari Books Online as their primary resource for research, problem solving, learning, and certification training.

Safari Books Online offers a range of plans and pricing for enterprise, government, education, and individuals.

Members have access to thousands of books, training videos, and prepublication manuscripts in one fully searchable database from publishers like O’Reilly Media, Prentice Hall Professional, Addison-Wesley Professional, Microsoft Press, Sams, Que, Peachpit Press, Focal Press, Cisco Press, John Wiley & Sons, Syngress, Morgan Kaufmann, IBM Redbooks, Packt, Adobe Press, FT Press, Apress, Manning, New Riders, McGraw-Hill, Jones & Bartlett, Course Technology, and hundreds more. For more information about Safari Books Online, please visit us online.

Please address comments and questions concerning this book to the publisher:

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at http://oreil.ly/lift-cookbook.

To comment or ask technical questions about this book, send email to bookquestions@oreilly.com.

For more information about our books, courses, conferences, and news, see our website at http://www.oreilly.com.

Find us on Facebook: http://facebook.com/oreilly

Follow us on Twitter: http://twitter.com/oreillymedia

Watch us on YouTube: http://www.youtube.com/oreillymedia

These recipes exist because of the many contributions on the Lift mailing list, where Liftafarians, as they are known, generously give their time to ask questions, put together example projects, give answers, share alternatives, and chip in with comments. Thank you.

I am indebted to the contributors who have taken the trouble to write new recipes, and to those who have provided corrections and suggestions.

You’ll see I’ve repeatedly referenced the work of Antonio Salazar Cardozo, Diego Medina, Tim Nelson, David Pollak, and Dave Whittaker. These are fantastic communicators: thank you guys.

It’s been a pleasure working with the O’Reilly team, and they have immensely improved the text. Thank you, especially Meghan Blanchette, Kara Ebrahim, and Kiel Van Horn.

Many thanks to Jono for all the encouragement and help.

To Jane, Mum, Dad: thank you. It’s amazing what you can do with a supportive family.

This chapter covers questions regarding starting development with Lift: running a first Lift application and setting up a coding environment. You’ll find answers regarding production deployment in Production Deployment.

The only prerequisite for installing and running Lift is to have Java 1.7 or later installed. Instructions for installing Java can be found at http://java.com/.

You can find out if you have Java from the shell or command prompt by asking for the version you have installed:

$ java -version java version "1.7.0_13" Java(TM) SE Runtime Environment (build 1.7.0_13-b20) Java HotSpot(TM) 64-Bit Server VM (build 23.7-b01, mixed mode)

Once you have Java, the following instructions will download, build, and start a basic Lift application.

Visit http://liftweb.net/download and download the most recent Lift 2.6 ZIP file.

Unzip the file.

Start Terminal or your favourite shell tool.

Navigate into the unzipped folder and into the scala_211 subfolder and then into the lift_basic folder.

Run ./sbt.

Required libraries will be downloaded automatically.

At the SBT prompt (>), type container:start.

Open your browser and go to http://127.0.0.1:8080/.

When you’re done, type exit at the SBT prompt to stop your application from running.

Visit http://liftweb.net/download and locate the link to the most recent ZIP version of Lift 2.6 and save this to disk.

Extract the contents of the ZIP file.

Navigate in Explorer to the extracted folder, and once inside, navigate into scala_211 and then lift_basic.

Double-click sbt.bat to run the build tool; a Terminal window should open.

Some versions of windows will display a warning that the SBT "publisher could not be verified". Click "Run" if this happens.

Required libraries will be downloaded automatically.

At the SBT prompt (>), type container:start.

You may find Windows Firewall blocking Java from running. If so, opt to "allow access."

Start your browser and go to http://127.0.0.1:8080/.

When you’re done, type exit at the SBT prompt to stop your application from running.

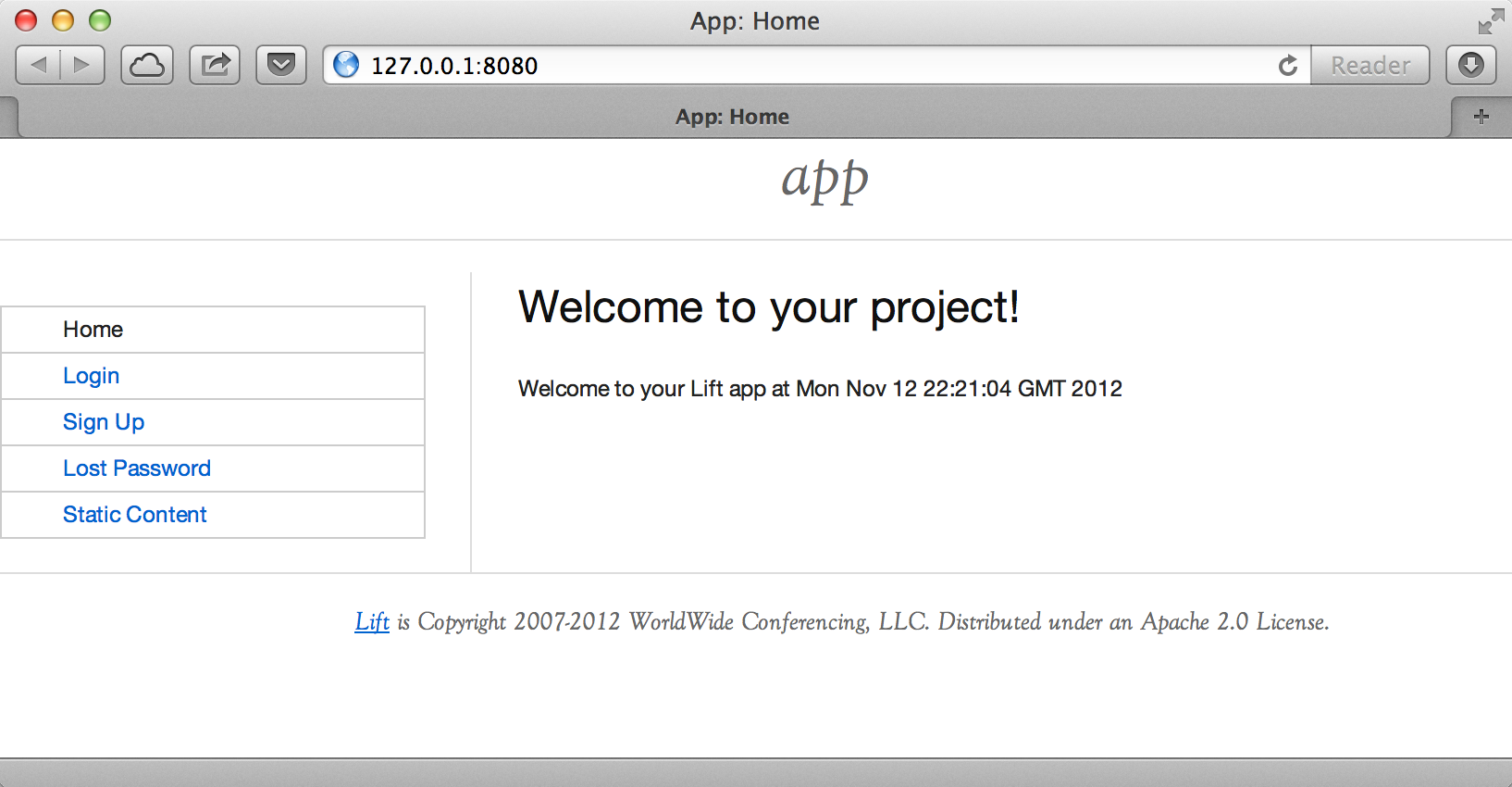

The result of these commands should be a basic Lift application running on your computer, as shown in Figure 1-1.

Lift isn’t installed in the usual sense of "installing software." Instead, you use standard build tools, such as SBT or Maven, to assemble your application with the Lift framework. In this recipe, we downloaded a ZIP file containing four fairly minimal Lift applications, and then started one of them via the build tool.

Typing sbt starts a Simple Build Tool used by Scala projects (it’s not specific to Lift). SBT will check the project definition and download any libraries required, which will include the Lift framework.

This download happens once, and the downloaded files are stored on disk in .ivy2 under your home folder.

Your application build is configured by build.sbt. Looking inside, you’ll see:

Basic information about your application, including a name and version

Resolvers, which inform SBT where to fetch dependencies from

Settings for plugins and the Scala compiler

A list of dependencies required to run your application, which will include the Lift framework

The SBT command container:start starts the web server on the default port of 8080 and

passes requests to your Lift application. The word container refers to the

software you deploy your application into. There are a variety of containers (Jetty and

Tomcat are probably the best known) all of which conform to a standard for deployment.

The upshot is you can build your application and deploy to whichever one you prefer.

The container:start command uses Jetty.

The source code of the application resides in src/main/webapp and src/main/scala. If you take a look at index.html in the webapp folder, you’ll see mention of data-lift="helloWorld.howdy". That’s a reference to the class defined in scala/code/snippet/HelloWorld.scala. This is a snippet invocation and an example of Lift’s view first approach to web applications. That is, there’s no routing set up for the index page to collect the data and forward it to the view. Instead, the view defines areas of the content that are replaced with functions, such as those functions defined in HelloWorld.scala.

Lift knows to look in the code package for snippets, because that package is declared as a location for snippets in scala/bootstrap/liftweb/Boot.scala. The Boot class is run when starting your application, and it’s where you can configure the behaviour of Lift.

The Simple Build Tool documentation is at http://www.scala-sbt.org.

Tutorials for Lift can be found in Simply Lift, Instant Lift Web Applications How-to (PACKT Publishing), and in Lift in Action (Manning Publications Co.).

You want to create a Lift web project from scratch without using the ZIP files provided on the official Lift website.

You will need to configure SBT and the Lift project yourself. Luckily, only five small files are needed.

First, create an SBT plugin file at project/plugins.sbt (all filenames are given relative to the project root directory):

addSbtPlugin("com.earldouglas"%"xsbt-web-plugin"%"0.7.0")

This file tells SBT that you will be using the xsbt-web-plugin. This plugin will allow you to start and stop your application.

Next, create an SBT build file, build.sbt:

organization:="org.yourorganization"name:="liftfromscratch"version:="0.1-SNAPSHOT"scalaVersion:="2.11.1"seq(webSettings:_*)libraryDependencies++={valliftVersion="2.6-RC1"Seq("net.liftweb"%%"lift-webkit"%liftVersion%"compile","org.eclipse.jetty"%"jetty-webapp"%"8.1.7.v20120910"%"container,test","org.eclipse.jetty.orbit"%"javax.servlet"%"3.0.0.v201112011016"%"container,compile"artifactsArtifact("javax.servlet","jar","jar"))}

Feel free to change the various versions, though be aware that certain versions of Lift are only built for certain versions of Scala.

Now that you have the basics of an SBT project, you can launch the sbt console. It should load all the necessary dependencies, including the proper Scala version, and bring you to a prompt.

Next, create the following file at src/main/webapp/WEB-INF/web.xml:

<!DOCTYPE web-app SYSTEM "http://java.sun.com/dtd/web-app_2_3.dtd"><web-app><filter><filter-name>LiftFilter</filter-name><display-name>Lift Filter</display-name><description>The Filter that intercepts Lift calls</description><filter-class>net.liftweb.http.LiftFilter</filter-class></filter><filter-mapping><filter-name>LiftFilter</filter-name><url-pattern>/*</url-pattern></filter-mapping></web-app>

The web.xml file tells web containers, such as Jetty as configured by xsbt-web-plugin, to pass all requests on to Lift.

Next, create a sample index.html file at src/main/webapp/index.html for our Lift app to load. For example:

<!DOCTYPE html><html><head><title>Lift From Scratch</title></head><body><h1>Welcome, you now have a working Lift installation</h1></body></html>

Finally, set up the basic Lift boot settings by creating a Boot.scala file at src/main/scala/bootstrap/Boot.scala. The following contents will be sufficient:

packagebootstrap.liftwebimportnet.liftweb.http.{Html5Properties,LiftRules,Req}importnet.liftweb.sitemap.{Menu,SiteMap}/*** A class that's instantiated early and run. It allows the application* to modify lift's environment*/classBoot{defboot{// where to search snippetLiftRules.addToPackages("org.yourorganization.liftfromscratch")// Build SiteMapdefsitemap():SiteMap=SiteMap(Menu.i("Home")/"index")// Use HTML5 for renderingLiftRules.htmlProperties.default.set((r:Req)=>newHtml5Properties(r.userAgent))}}

Congratulations, you now have a working Lift project!

You can verify that you have a working Lift project by launching the Jetty web container from the SBT console with the container:start command. First, the Boot.scala file should be compiled and then you should be notified that Jetty has launched and is listening at http://localhost:8080. You should be able to go to the address in your web browser and see the rendered index.html file you created earlier.

As shown previously, creating a Lift project from scratch is a relatively simple process. However, it can be a tricky one for newcomers, especially if you are not used to the Java Virtual Machine (JVM) ecosystem and its conventions for web containers. If you run into problems, make sure the files are in the correct locations and that their contents were not mistakenly modified. If all else fails, refer to the sample project next or ask for help on the Lift mailing list.

Lift projects using SBT or similar build tools follow a standard project layout, where Scala source code is in src/main/scala and web resources are in src/main/webapp. Your Scala files must be placed either directly at src/main/scala or in nested directories matching the organization and name you defined in build.sbt, in our case giving us src/main/scala/org/yourorganization/liftfromscratch/. Test files match the directory structure but are placed in src/test/ instead of src/main/. Likewise, the web.xml file must be placed in src/main/webapp/WEB-INF/ for it to be properly detected.

Given these conventions, you should have a directory structure looking quite, if not exactly, like this:

- project root directory

| build.sbt

- project/

| plugins.sbt

- src/

- main/

- scala/

- bootstrap/

| Boot.scala

- org/

- yourorganization/

- liftfromscratch/

| <your Scala code goes here>

- webapp/

| index.html

| <any other web resources - images, HTML, JavaScript, etc - go here>

- WEB-INF/

| web.xml

- test/

- scala/

- org/

- yourorganization/

- liftfromscratch/

| <your tests go here>

There is a sample project created using this method.

You want to develop your Lift application using your favourite text editor, hitting reload in your browser to see changes.

Run SBT while you are editing, and ask it to detect and compile changes to Scala files. To do that, start sbt and enter the following to the SBT prompt:

~; container:start; container:reload /

When you save a source file in your editor, SBT will detect this change, compile the file, and reload the web container.

An SBT command prefixed with ~ makes that command run when files

change. The first semicolon introduces a sequence of commands, where if

the first command succeeds, the second will run. The second semicolon

means the reload command will run if the start command ran OK. The start

command will recompile any Scala source files that have changed.

When you run SBT in this way, you’ll notice the following output:

1. Waiting for source changes... (press enter to interrupt)

And indeed, if you do press Enter in the SBT window, you’ll exit this triggered execution mode and SBT will no longer be looking for file changes. However, while SBT is watching for changes, the output will indicate when this happens with something that looks a little like this:

[info] Compiling 1 Scala source to target/scala-2.11/classes...

[success] Total time: 1 s, completed 26-Aug-2014 17:17:47

[pool-301-thread-4] DEBUG net.liftweb.http.LiftServlet - Destroyed Lift handler.

[info] stopped o.e.j.w.WebAppContext{/,[src/main/webapp/]}

[info] NO JSP Support for /, did not find org.apache.jasper.servlet.JspServlet

[info] started o.e.j.w.WebAppContext{/,[src/main/webapp/]}

[success] Total time: 0 s, completed 26-Aug-2014 17:17:48

2. Waiting for source changes... (press enter to interrupt)

Edits to HTML files don’t trigger the SBT compile and reload commands. This is because SBT’s default behaviour is to look for Scala and Java source file changes, and also changes to files in src/main/resources/. This works out just fine, because Jetty will use your modified HTML file when you reload the browser page.

Restarting the web container each time you edit a Scala file isn’t ideal. You can reduce the need for restarts by integrating JRebel into your development environment, as described in Incorporating JRebel.

However, if you are making a serious number of edits, you may prefer to issue a container:stop command until you’re ready to run you application again with container:start. This will prevent SBT compiling and restarting your application over and over. The SBT console has a command history, and using the up and down keyboard arrows allows you to navigate to previous commands and run them by pressing the Return key. That takes some of the tedium out of these long commands.

If you are using Java before Java 8, one error you may run into is:

java.lang.OutOfMemoryError: PermGen space

The permanent generation is a Java Virtual Machine concept. It’s the area of memory used for storing classes (amongst other things). It’s a fixed size, and once it is full, this PermGen error appears. As you might imagine, continually restarting a container causes many classes to be loaded and unloaded, but the process is not perfect, effectively leaking memory. The best you can do is stop and then restart SBT. If you’re seeing this error often, check the setting for -XX:MaxPermSize inside the sbt (or sbt.bat) script, and if you can, double it.

Triggered execution has a number of settings you can adjust, as described in the SBT documentation.

See the SBT Command Line Reference for an overview of available commands.

Commands and configuration options for the SBT web plugin are described on the GitHub wiki.

You want to avoid application restarts when you change a Scala source file by using JRebel.

There are three steps required: install JRebel once; each year, request the free Scala license; and configure SBT to use JRebel.

First, visit https://my.jrebel.com/plans and request the free Scala license.

Second, download the "Generic ZIP Archive" version of JRebel, unzip it to where you like. For this recipe, I’ve chosen to use /opt/zt/jrebel/.

When you have received your account confirmation email from JRebel, you can copy your "authentication token" from the "Active" area of ZeroTurnaround’s site. To apply the token to your local install, run the JRebel configuration script:

$ /opt/zt/jrebel/bin/jrebel-config.sh

For Windows, navigate to and launch bin\jrebel-config.cmd.

In the "Activation" setting, select "I want to use myJRebel" and then in the "License" section, paste in your activation token. Click the "Activate" button, and once you see the license status change to "You have a valid myJRebel token," click "Finish."

Finally, configure SBT by modifying the sbt script to enable JRebel. This means setting the -javaagent and -noverify flags for Java, and enabling the JRebel Lift plugin.

For Mac and Linux, the script that’s included with the Lift downloads would become:

java -Drebel.lift_plugin=true -noverify -javaagent:/opt/zt/jrebel/jrebel.jar \ -Xmx1024M -Xss2M -XX:MaxPermSize=512m -XX:+CMSClassUnloadingEnabled -jar \ `dirname $0`/sbt-launch-0.12.jar "$@"

For Windows, modify sbt.bat to be:

set SCRIPT_DIR=%~dp0 java -Drebel.lift_plugin=true -noverify -javaagent:c:/opt/zt/jrebel/jrebel.jar \ -XX:+CMSClassUnloadingEnabled -XX:MaxPermSize=256m -Xmx1024M -Xss2M \ -jar "%SCRIPT_DIR%\sbt-launch-0.12.jar" %*

There’s nothing else to do to use JRebel. When you start SBT, you’ll see a large banner stating something like this:

############################################################# JRebel 5.1.1 (201211271929) (c) Copyright ZeroTurnaround OU, Estonia, Tartu. Over the last 30 days JRebel prevented at least 335 redeploys/restarts saving you about 13.6 hours. ....

With JRebel installed, you can now container:start your application, modify and compile a Scala file, and reload a page in your application. You’ll see a notice that the class has been reloaded:

[2012-12-16 23:15:44] JRebel: Reloading class 'code.snippet.HelloWorld'.

That change is live, without having to restart the container.

JRebel is very likely to speed up your development. It updates code in a running Java Virtual Machine, without having to stop and restart it. The effect is that, on the whole, you can compile a class, then hit reload in your browser to see the change in your Lift application.

Even with JRebel you will need to restart your applications from time to time, but JRebel usually reduces the number of restarts. For example, Boot.scala is run when your application starts, so if you modify something in your Boot.scala, you’ll need to stop and start your application. JRebel can’t help with that.

But there are also other situations that JRebel cannot help with, such as when a superclass changes. Generally, JRebel will emit a warning about this in the console window. If that happens, stop and start your application.

The -Drebel.lift_plugin=true setting adds Lift-specific functionality to JRebel. Specifically, it allows JRebel to reload changes to LiftScreen, Wizard, and RestHelper. This means you can change fields or screens, and change REST serve code.

This recipe uses a free Scala license for a service called myJRebel. This communicates with JRebel servers via the activation code. If you have purchased a license from ZeroTurnaround, the situation is slightly different. In this case, you will have a license key that you store in a file called jrebel.lic. You can place the file in a .jrebel folder in your home directory, or alongside jrebel.jar (e.g., in the /opt/zt/jrebel/ folder, if that’s where you installed JRebel), or you can specify some other location. For the latter option, modify the sbt script and specify the location of the file by adding another Java setting:

-Drebel.license=/path/to/jrebel.lic

Details about how JRebel works can be found in the ZeroTurnaround FAQ.

The Lift support was announced in a blog post in 2012, where you’ll find more about the capabilities of the plugin.

You want to develop your Lift application using the Eclipse IDE, hitting reload in your browser to see changes.

Use the "Scala IDE for Eclipse" plugin to Eclipse, and the sbteclipse plugin for SBT. This will give Eclipse the ability to understand Scala, and SBT the ability to create project files that Eclipse can load.

The instructions for the Eclipse plugin are given at http://scala-ide.org. There are a number of options to be aware of when picking an update site to use: there are different sites for Scala 2.9 and 2.10, and for different versions of Eclipse. Start with the stable version of the plugin rather than a nightly or milestone version. This will give you an Eclipse perspective that knows about Scala.

Once the Eclipse plugin is installed and restarted, you need to create the project files to allow Eclipse to load your Lift project. Install sbteclipse by adding the following to projects/plugins.sbt in your Lift project:

addSbtPlugin("com.typesafe.sbteclipse"%"sbteclipse-plugin"%"2.5.0")

You can then create Eclipse project files (.project and .classpath) by entering the following into the SBT prompt:

eclipse

Open the project in Eclipse by navigating to "File → Import…" and selecting "General → Existing Projects into Workspace." Browse to and choose your Lift project. You are now set up to develop your application in Eclipse.

To see live changes as you edit and save your work, run SBT in a separate terminal window. That is, start sbt from a terminal window outside of Eclipse and enter the following:

~; container:start; container:reload /

The behaviour of this command is described in Developing Using a Text Editor, but if you’re using JRebel (see Incorporating JRebel), then you just need to run container:start by itself.

You can then edit in Eclipse, save to compile, and in your web browser, hit reload to see the changes.

One of the great benefits of an IDE is the ability to navigate source, by Cmd+click (Mac) or F3 (PC).

You can ask the SBT eclipse command to download the Lift

source and Scaladoc, allowing you to click through to the Lift source from

methods and classes, which is a useful way to discover more about Lift.

To achieve this in a project, run eclipse with-source=true in SBT, but if you want

this to be the default behaviour, add the following to your build.sbt file:

EclipseKeys.withSource:=true

If you find yourself using the plugin frequently, you may wish to declare it in your global SBT configuration files so it applies to all projects. To do that, create a ~/.sbt/0.13/plugins/build.sbt file containing:

resolvers+=Classpaths.typesafeResolveraddSbtPlugin("com.typesafe.sbteclipse"%"sbteclipse-plugin"%"2.5.0")

Note the blank line between the resolvers and the addSbtPlugin. In .sbt files, a blank line is required between statements.

Finally, set any global configurations (such as withSource) in ~/.sbt/0.13/global.sbt.

There are other useful settings for sbteclipse. You’ll also find the latest version number for the plugin on that site.

The SBT ~/.sbt/ structure is described in the guide to using plugins and in the wiki page for global configuration.

You want to use the IntelliJ IDEA development environment when writing your Lift application.

You need the Scala plugin for IntelliJ, and an SBT plugin to generate the IDEA project files.

The IntelliJ plugin you’ll need to install only once, and these instructions are for IntelliJ IDEA 13. The details may vary between releases of the IDE, but the basic idea is to find the JetBrains Scala plugin, and download and install it.

From the "Welcome to IntelliJ IDEA" or "Quick Staert" screen, select "Configure" and then "Plugins." Select "Browse repositories…" In the search box, top right, type "Scala." You’ll find on the left a number of matches: select "Scala." On the right, you’ll see confirmation that this is the "Plugin for Scala language support" and the vendor is JetBrains, Inc. Click the "Install Plugin" button, confirm you want to install, then "Close" the repository browser, and finally OK out of the plugins window. You’ll be prompted to restart IntelliJ IDEA.

With the IDE configured, you now need to include the SBT plugin inside your Lift project by adding the following to the file projects/plugins.sbt:

addSbtPlugin("com.github.mpeltonen"%"sbt-idea"%"1.6.0")

Start SBT, and at the SBT prompt, create the IDEA project files by typing:

gen-idea

This will generate the .idea and .iml files that IntelliJ uses. Inside IntelliJ you can open the project from the "File" menu, picking "Open…" and then navigating to your project and selecting the directory.

To see live changes as you edit and save your work, run SBT in a separate Terminal window. That is, start sbt from a Terminal window outside of IntelliJ and enter the following:

~; container:start; container:reload /

This behaviour of this command is described in Developing Using a Text Editor, but if you’re using JRebel (see Incorporating JRebel), then you just need to run container:start by itself.

Each time you compile or make the project, the container will pick up the changes, and you can see them by reloading your browser window.

By default, the gen-idea command will fetch source for dependent libraries. That means out of the box you can click through to Lift source code to explore it and learn more about the framework.

If you want to try the latest snapshot version of the plugin, you’ll need to include the snapshot repository in your plugin.sbt file:

resolvers+="Sonatype snapshots"at"http://oss.sonatype.org/content/repositories/snapshots/"

Setting up the SBT IDEA plugin globally, for all SBT projects, is the same pattern as described for Eclipse in Developing Using Eclipse.

The sbt-idea plugin doesn’t have a configuration guide yet. One way to discover the features is to browse the release notes in the notes folder of that project.

JetBrains has a blog for the Scala plugin with feature news and tips.

You’re developing using the default lift_proto.db H2 database, and you would like to use a tool to look at the tables.

Use the web interface included as part of H2. Here are the steps:

Locate the H2 JAR file. For me, this was: ~/.ivy2/cache/com.h2database/h2/jars/h2-1.2.147.jar.

Start the server from a Terminal window using the JAR file: java -cp /path/to/h2-version.jar org.h2.tools.Server.

This should launch your web browser, asking you to log in.

Select "Generic H2 Server" in "Saved Settings."

Enter jdbc:h2:/path/to/youapp/lift_proto.db;AUTO_SERVER=TRUE for "JDBC URL," adjusting the path for the location of your database, and adjusting the name of the database (lift_proto.db) if different in your Boot.scala.

Press "Connect" to view and edit your database.

The default Lift projects that include a database, such as lift_basic, use the H2 relational database, as it can be included as an SBT dependency and requires no external installation or configuration. It’s a fine product, although production deployments typically use standalone databases, such as PostgreSQL or MySQL.

Even if you’re deploying to a non–H2 database, it may be useful to keep H2 around because it has an in-memory mode, which is great for unit tests. This means you can create a database in memory, and throw it away when your unit test ends.

If you don’t like the web interface, the connection settings described in this recipe should give you the information you need to configure other SQL tools.

The H2 site lists the features and configuration options for database engine.

If you’re using the console frequently, consider making it accessible from your Lift application in development node. This is described by Diego Medina in a blog post.

The example Lift project for Relational Database Persistence with Record and Squeryl has the H2 console enabled.

You need to make two changes to your build.sbt file. First, reference the snapshot repository:

resolvers+="snapshots"at"http://oss.sonatype.org/content/repositories/snapshots"

Second, change the liftVersion in your build to be the latest version. For this example, let’s use the 3.0 snapshot version of Lift:

valliftVersion="3.0-SNAPSHOT"

Restarting SBT (or issuing a reload command) will trigger a download

of the latest build.

Production releases of Lift (e.g., 2.5, 2.6), as well as milestone releases (e.g., 2.6-M3) and release candidates (e.g., 2.6-RC1) are published into a releases repository. When SBT downloads them, they are downloaded once.

Snapshot releases are different: they are the result of an automated

build, and change often. You can force SBT to resolve the latest

versions by running the command clean and then update.

To learn the detail of snapshot versions, dig into the Maven Complete Reference.

A new Scala version has just been released and you want to immediately use it in your Lift project.

Providing that the Scala version is binary compatible with the latest version used by Lift, you can change your build file to use the latest version of Scala.

For example, let’s assume your build.sbt file is set up to use Lift 2.6 with Scala 2.11.1:

scalaVersion:="2.11.1"libraryDependencies++={valliftVersion="2.6"Seq("net.liftweb"%%"lift-webkit"%liftVersion%"compile->default")}

To use Scala 2.11.2, just change the value in scalaVersion.

Dependencies have a particular naming convention. For example, the lift-webkit library for Lift 2.6 is called lift-webkit_2.11-2.6.jar. Normally, in build.sbt we simply refer to "net.liftweb" %% "lift-webkit", and SBT turns that into the name of a file that can be downloaded.

If for any reason you need to control the dependency downloaded, you can drop the %% and use % instead:

scalaVersion:="2.11.2"libraryDependencies++={valliftVersion="2.6"Seq("net.liftweb"%"lift-webkit_2.11"%liftVersion%"compile->default")}

What we’ve done here is explicitly specified we want the 2.11 Scala version for Lift. This is the difference between using %% and % in a

dependency: with %% you do not specify the Scala version, as SBT will append

the scalaVersion number automatically; with % this automatic change is not made,

so we have to manually specify more details for the name of the library.

Please note this only works for minor releases of Scala: major releases break compatibility. For example, Scala 2.10.1 is compatible with Scala 2.10.2 but not 2.11.

Binary compatibility in Scala is discussed on the Scala user mailing list.

The SBT Library Dependencies page describes how SBT manages version numbers.

Using the Latest Lift Build describes how to use a snapshot version of Lift.

Generating HTML is often a major component of web applications. This chapter is concerned with Lift’s View First approach and use of CSS Selectors. Later chapters focus more specifically on form processing, REST web services, JavaScript, Ajax, and Comet.

Code for this chapter is at https://github.com/LiftCookbook/cookbook_html.

You can use the Scala REPL to run your CSS selectors.

Here’s an example where we test out a CSS selector that adds an href attribute to a link.

Start from within SBT and use the console command to get into the REPL:

> console [info] Starting scala interpreter... [info] Welcome to Scala version 2.9.1.final Type in expressions to have them evaluated. Type :help for more information.

scala>importnet.liftweb.util.Helpers._importnet.liftweb.util.Helpers._scala>valf="a [href]"#>"http://example.org"f:net.liftweb.util.CssSel=(Full(a[href]),Full(ElemSelector(a,Full(AttrSubNode(href)))))scala>valin=<a>clickme</a>in:scala.xml.Elem=<a>clickme</a>scala>f(in)res0:scala.xml.NodeSeq=NodeSeq(<ahref="http://example.org">clickme</a>)

The Helpers._ import brings in the CSS selector functionality, which we then exercise by creating a selector, f, calling it with a very simple template, in, and observing the result, res0.

CSS selector transforms are one of the distinguishing features of Lift. They succinctly describe a node in your template (lefthand side) and give a replacement (operation, the righthand side). They do take a little while to get used to, so being able to test them at the Scala REPL is useful.

It may help to know that prior to CSS selectors, Lift snippets were typically defined in terms

of a function that took a NodeSeq and returned a NodeSeq, often via a method called bind. Lift would take your template, which would be the input NodeSeq, apply the function, and return a new NodeSeq. You won’t see that usage so often anymore, but the principle is the same.

The CSS selector functionality in Lift gives you a CssSel function,

which is NodeSeq => NodeSeq. We exploit this in the previous example by constructing an input

NodeSeq (called in), then creating a CSS function (called f). Because we know that CssSel

is defined as a NodeSeq => NodeSeq, the natural way to execute the selector is to supply

the in as a parameter, and this gives us the answer, res0.

If you use an IDE that supports a worksheet, which both Eclipse and IntelliJ IDEA do, then you can also run transformations in a worksheet.

The syntax for selectors is best described in Simply Lift.

See Developing Using Eclipse and Developing Using IntelliJ IDEA for how to work with Eclipse and IntelliJ IDEA.

You want your CSS selector binding to apply to the results of earlier binding expressions.

Use andThen rather than & to compose your selector expressions.

For example, suppose we want to replace <div id="foo"/> with

<div id="bar">bar content</div> but for some reason we need to

generate the bar div as a separate step in the selector expression:

sbt> console [info] Starting scala interpreter... [info] Welcome to Scala version 2.9.1.final (Java 1.7.0_05). Type in expressions to have them evaluated. Type :help for more information.

scala>importnet.liftweb.util.Helpers._importnet.liftweb.util.Helpers._scala>defrender="#foo"#><divid="bar"/>andThen"#bar *"#>"bar content"render:scala.xml.NodeSeq=>scala.xml.NodeSeqscala>render(<divid="foo"/>)res0:scala.xml.NodeSeq=NodeSeq(<divid="bar">barcontent</div>)

When using &, think of the CSS selectors as always applying to the

original template, no matter what other expressions you are combining.

This is because & is aggregating the selectors together before applying them. In contrast, andThen is

a method of all Scala functions that composes two functions together, with the first being

called before the second.

Compare the previous example if we change the andThen to

&:

scala>defrender="#foo"#><divid="bar"/>&"#bar *"#>"bar content"render:net.liftweb.util.CssSelscala>render(<divid="foo"/>)res1:scala.xml.NodeSeq=NodeSeq(<divid="bar"></div>)

The second expression will not match, as it is applied to the original

input of <div id="foo"/>—the selector of #bar won’t match on id=foo,

and so adds nothing to the results of render.

The Lift wiki page for CSS selectors also describes this use of andThen.

You want to set the content of an HTML meta tag from a snippet.

Use the @ CSS binding name selector. For example, given:

<metaname="keywords"content="words, here, please"/>

The following snippet code will update the value of the content attribute:

"@keywords [content]"#>"words, we, really, want"

The @ selector selects all elements with the given name. It’s useful in this case to change the <meta name="keyword"> tag, but you may also see it used elsewhere. For example, in an HTML form, you can select input fields such as <input name="address"> with "@address".

The [content] part is an example of a replacement rule that can follow a selector. That’s to say, it’s not specific to the @ selector and can be used with other selectors. In this example, it replaces the value of the attribute called "content." If the meta tag had no "content" attribute, it would be added.

There are two other replacement rules useful for manipulating attributes:

[content!] to remove an attribute with a matching value.

[content+] to append to the value.

Examples of these would be:

scala>importnet.liftweb.util.Helpers._importnet.liftweb.util.Helpers._scala>valin=<metaname="keywords"content="words, here, please"/>in:scala.xml.Elem=<metaname="keywords"content="words, here, please"></meta>scala>valremove="@keywords [content!]"#>"words, here, please"remove:net.liftweb.util.CssSel=CssBind(Full(@keywords[content!]),Full(NameSelector(keywords,Full(AttrRemoveSubNode(content)))))scala>remove(in)res0:scala.xml.NodeSeq=NodeSeq(<metaname="keywords"></meta>)

and:

scala>valadd="@keywords [content+]"#>", thank you"add:net.liftweb.util.CssSel=CssBind(Full(@keywords[content+]),Full(NameSelector(keywords,Full(AttrAppendSubNode(content)))))scala>add(in)res1:scala.xml.NodeSeq=NodeSeq(<metacontent="words, here, please, thank you"name="keywords"></meta>)

Although not directly relevant to meta tags, you should be aware that there is one convenient special case for appending to an attribute. If the attribute is class, a space is added together with your class value. As a demonstration of that, here’s an example of appending a class called btn-primary to a div:

scala>defrender="div [class+]"#>"btn-primary"render:net.liftweb.util.CssSelscala>render(<divclass="btn"/>)res0:scala.xml.NodeSeq=NodeSeq(<divclass="btn btn-primary"></div>)

The syntax for selectors is best described in Simply Lift.

See Testing and Debugging CSS Selectors for how to run selectors from the REPL.

You want to set the <title> of the page from a Lift snippet.

Select the content of the title element and replace it with the

text you want:

"title *"#>"I am different"

Assuming you have a <title> tag in your template, this will

result in:

<title>I am different</title>

This example uses an element selector, which picks out tags in the HTML template and replaces the content. Notice that we are using "title *" to select the content of the title tag. If we had left off the *, the entire title tag would have been replaced with text.

As an alternative, it is also possible to set the page title from the contents of SiteMap,

meaning the title used will be the title you’ve assigned to the page in

the site map. To do that, make use of Menu.title in your template directly:

<titledata-lift="Menu.title"></title>

The Menu.title code appends to any existing text in the title.

This means the following will have the phrase "Site Title - " in the

title, followed by the page title:

<titledata-lift="Menu.title">Site Title -</title>

If you need more control, you can of course bind on <title> using a

regular snippet. This example uses a custom snippet to put the site

title after the page title:

<titledata-lift="MyTitle"></title>

objectMyTitle{defrender=<title><lift:Menu.title/>-SiteTitle</title>}

Notice that our snippet is returning another snippet, <lift:Menu.title/>. This is a perfectly normal thing to do in Lift, and snippet invocations returned from snippets will be processed by Lift as normal.

Snippet Not Found When Using HTML5 describes the different ways to reference a snippet, such as data-lift and <lift: ... />.

At the Assembla website, there’s more about SiteMap and the Menu snippets.

You want to make use of Internet Explorer HTML conditional comments in your templates.

Put the markup in a snippet and include the snippet in your page or template.

For example, suppose we want to include the HTML5 Shiv (a.k.a. HTML5 Shim) JavaScript so we can use HTML5 elements with legacy IE browsers. To do that, our snippet would be:

packagecode.snippetimportscala.xml.UnparsedobjectHtml5Shiv{defrender=Unparsed("""<!--[if lt IE 9]><script src="http://html5shim.googlecode.com/svn/trunk/html5.js"></script><![endif]-->""")}

We would then reference the snippet in the <head> of a page, perhaps even in

all pages via templates-hidden/default.html:

<scriptdata-lift="Html5Shiv"></script>

The HTML5 parser used by Lift does not carry comments from the source through to the rendered page. If you just tried to paste the html5shim markup into your template you’d find it missing from the rendered page.

We deal with this by generating unparsed markup from a snippet. If you’re looking at

Unparsed and are worried, your instincts are correct. Normally, Lift would cause the

markup to be escaped, but in this case, we really do want

unparsed XML content (the comment tag) included in the output.

If you find you’re using IE conditional comments frequently, you may want to create a more general version of the snippet. For example:

packagecode.snippetimportxml.{NodeSeq,Unparsed}importnet.liftweb.http.SobjectIEOnly{privatedefcondition:String=S.attr("cond")openOr"IE"defrender(ns:NodeSeq):NodeSeq=Unparsed("<!--[if "+condition+"]>")++ns++Unparsed("<![endif]-->")}

It would be used like this:

<divdata-lift="IEOnly">A div just for IE</div>

and produces output like this:

<!--[if IE]><div>A div just for IE</div><![endif]-->

Notice that the condition test defaults to IE, but first tries to look for an attribute called cond. This allows you to write:

<divdata-lift="IEOnly?cond=lt+IE+9">You're using IE 8 or earlier</div>

The + symbol is the URL encoding for a space, resulting in:

<!--[if lt IE 9]><div>You're using IE 8 or earlier</div><![endif]-->

The IEOnly example is derived from a posting on the mailing list from Antonio Salazar Cardozo.

The html5shim project can be downloaded from its Google Code site.

You want a snippet to return the original markup associated with the snippet invocation.

Use the PassThru transform.

Suppose you have a snippet that performs a transform when some condition is met, but if the condition is not met, you want the snippet to return the original markup.

Starting with the original markup:

<h2>Pass Thru Example</h2><p>There's a 50:50 chance of seeing "Try again" or "Congratulations!":</p><divdata-lift="PassThruSnippet">Try again - this is the template content.</div>

We could leave it alone or change it with this snippet:

packagecode.snippetimportnet.liftweb.util.Helpers._importnet.liftweb.util.PassThruimportscala.util.Randomimportxml.TextclassPassThruSnippet{privatedeffiftyFifty=Random.nextBooleandefrender=if(fiftyFifty)"*"#>Text("Congratulations! The content was changed")elsePassThru}

PassThru is an identity function of type NodeSeq => NodeSeq. It returns the input it

is given:

objectPassThruextendsFunction1[NodeSeq,NodeSeq]{defapply(in:NodeSeq):NodeSeq=in}

A related example is ClearNodes, defined as:

objectClearNodesextendsFunction1[NodeSeq,NodeSeq]{defapply(in:NodeSeq):NodeSeq=NodeSeq.Empty}

The pattern of converting one NodeSeq to another is simple, but also powerful enough to get you out of most situations, as you can always arbitrarily rewrite the NodeSeq.

You’re using Lift with the HTML5 parser and one of your snippets is rendering with a "Class Not

Found" error. It even happens for <lift:HelloWorld.howdy />.

Switch to the designer-friendly snippet invocation mechanism. For example:

<divdata-lift="HellowWorld.howdy"></div>

In this Cookbook, we use the HTML5 parser, which is set in Boot.scala:

// Use HTML5 for renderingLiftRules.htmlProperties.default.set((r:Req)=>newHtml5Properties(r.userAgent))

The HTML5 parser and the traditional Lift XHTML parser have different

behaviours. In particular, the HTML5 parser converts elements and attribute names to lowercase when looking up snippets. This means Lift would take <lift:HelloWorld.howdy /> and look for a class called helloworld rather than HelloWorld, which would be the cause of the "Class Not Found" error.

Switching to the designer-friendly mechanism is the solution here, and you gain validating HTML as a bonus.

There are four popular ways of referencing a snippet:

data-lift="MySnippet"This is the style we use in this book, and is valid HTML5 markup.

class="lift:MySnippet"Also valid HTML5, but you must include the "lift" prefix for Lift to recognise this as a snippet.

lift attribute, as in: lift="MySnippet"This won’t strictly validate against HTML5, but you may see it used.

<lift:MySnippet />You’ll see the usage of this tag in templates declining because of the way it interacts with the HTML5 parser. However, it works just fine outside of a template, for example when embedding a snippet invocation in your server-side code (Setting the Page Title includes an example of this for Menu.title).

The key differences between the XHTML and HTML5 parsers are outlined on the mailing list.

You’ve modified CSS or JavaScript in your application, but web browsers have cached your resources and are using the older versions. You’d like to avoid this browser caching.

Add the with-resource-id attribute to script or link tags:

<scriptdata-lift="with-resource-id"src="/myscript.js"type="text/javascript"></script>

The addition of this attribute will cause Lift to append a resource ID to

your src (or href), and as this resource ID changes each time Lift

starts, it defeats browser caching.

The resultant HTML might be:

<scriptsrc="/myscript.js?F619732897824GUCAAN=_"type="text/javascript"></script>

The random value that is appended to the resource is computed when your Lift application boots. This means it should be stable between releases of your application.

If you need some other behaviour from with-resource-id, you can assign

a new function of type String => String to

LiftRules.attachResourceId. The default implementation, shown previously,

takes the resource name, /myscript.js in the example, and returns the

resource name with an ID appended.

You can also wrap a number of tags inside a

<lift:with-resource-id>...<lift:with-resource-id> block. However,

avoid doing this in the <head> of your page, as the HTML5 parser will

move the tags to be outside of the head section.

Note that some proxies may choose not to cache resources with query parameters at all. If that impacts you, it’s possible to code a custom resource ID method to move the random resource ID out of the query parameter and into the path.

Here’s one approach to doing this. Rather than generate JavaScript and CSS links that look like /assets/style.css?F61973, we will generate /cache/F61973/assets/style.css. We will then will need to tell our container or web server to take requests that look like this new format, and remove the /cache/F61973/ part.

The code to change the way links are created:

packagecode.libimportnet.liftweb.util._importnet.liftweb.http._objectCustomResourceId{definit():Unit={// The random number we're using to avoid cachingvalresourceId=Helpers.nextFuncName// Prefix with-resource-id links with "/cache/{resouceId}"LiftRules.attachResourceId=(path:String)=>{"/cache/"+resourceId+path}}}

This would be initialised in Boot.scala:

CustomResourceId.init()

or you could just paste all the code into Boot.scala, if you prefer.

With the code in place, we can, for example, modify templates-hidden/default.html and add a resource ID class to jQuery:

<scriptid="jquery"data-lift="with-resource-id"src="/classpath/jquery.js"type="text/javascript"></script>

At runtime, this would be rendered in HTML as:

<scripttype="text/javascript"id="jquery"src="/cache/F352555437877UHCNRW/classpath/jquery.js"></script>

Finally we need a way to rewrite URLs like this back to the original path. That is, remove the /cache/… part. There are a few ways to achieve this. If you’re using nginx or Apache in front of your Lift application, you can configure those web servers to perform the rewrite before it reaches Lift.

http://bit.ly/14BfNYJ shows the default implementation of attachResourceId.

Google’s "Optimize caching" notes are a good source of information about browser behaviour.

There is support for URL rewriting, described on the Lift wiki. Rewriting is used rarely, and only for special cases. It’s not suitable for this recpie, as outlined in a posting to Stackoverflow. Many problems that look like rewriting problems are better solved with a Menu Param.

Grunt and similar tools can modify paths. Diego Medina’s post on Using Grunt and Bower with Lift is a good starting point.

You use a template for layout, but on one specific page you need to add

something to the <head> section.

Use the head snippet so Lift knows to merge the

contents with the <head> of your page. For example, suppose you have

the following contents in templates-hidden/default.html:

<htmllang="en"xmlns:lift="http://liftweb.net/"><head><metacharset="utf-8"></meta><titledata-lift="Menu.title">App:</title><scriptid="jquery"src="/classpath/jquery.js"type="text/javascript"></script><scriptid="json"src="/classpath/json.js"type="text/javascript"></script></head><body><divid="content">The main content will get bound here</div></body></html>

Also suppose you have index.html on which you want to include red-titles.css to change the style of just this page.

Do so by including the CSS in the part of the page that will get processed, and mark it with the head snippet:

<!DOCTYPE html><html><head><title>Special CSS</title></head><bodydata-lift-content-id="main"><divid="main"data-lift="surround?with=default;at=content"><linkdata-lift="head"rel="stylesheet"href="red-titles.css"type="text/css"/><h2>Hello</h2></div></body></html>

Note that this index.html page is validated HTML5, and will produce a

result with the custom CSS inside the <head> tag, something like this:

<!DOCTYPE html><htmllang="en"><head><metacharset="utf-8"><title>App: Special CSS</title><scripttype="text/javascript"src="/classpath/jquery.js"id="jquery"></script><scripttype="text/javascript"src="/classpath/json.js"id="json"></script><linkrel="stylesheet"href="red-titles.css"type="text/css"></head><body><divid="main"><h2>Hello</h2></div><scripttype="text/javascript"src="/ajax_request/liftAjax.js"></script><scripttype="text/javascript">// <![CDATA[varlift_page="F557573613430HI02U4";// ]]></script></body></html>

If you find your tags not appearing in the <head> section, check that

the HTML in your template and page is valid HTML5.

You can also use <lift:head>...</lift:head> to wrap a number of

expressions, and will see <head_merge>...</head_merge> used in the code

example as an alternative to <lift:head>.

Another variant you may see is class="lift:head", as an alternative to data-lift="head".

The head snippet is a built-in snippet, but otherwise no different from any snippet you might write. What the snippet does is emit a <head> block, containing the elements you want in the head. These can be <title>, <link>, <meta>, <style>, <script>, or <base> tags. How does this <head> block produced by the head snippet end up inside the main <head> section of the page? When Lift processes your template, it automatically merges all <head> tags into the main <head> section of the page.

You might suspect you can therefore put a plain old <head> section anywhere on your template. You can, but that would not necessarily be valid HTML5 markup.

There’s also tail, which works in a similar way, except anything marked with this snippet is moved to be just before the close of the body tag.

Move JavaScript to End of Page describes how to move JavaScript to the end of the page with the tail snippet.

The W3C HTML validator is a useful tool for tracking down HTML markup issues that may cause problems with content being moved into the head of your page.

In Boot.scala, add the following:

importnet.liftweb.util._importnet.liftweb.http._LiftRules.uriNotFound.prepend(NamedPF("404handler"){case(req,failure)=>NotFoundAsTemplate(ParsePath(List("404"),"html",true,false))})

The file src/main/webapp/404.html will now be served for requests to unknown resources.

The uriNotFound Lift rule needs to return a NotFound in reply to a

Req and Box[Failure]. This allows you to customise the

response based on the request and the type of failure.

There are three types of NotFound:

NotFoundAsTemplateUseful to invoke the Lift template processing

facilities from a ParsePath

NotFoundAsResponseAllows you to return a specific LiftResponse

NotFoundAsNodeWraps a NodeSeq for Lift to translate into a 404

response

In the example, we’re matching any not found situation, regardless of the request and the failure, and evaluating

this as a resource identified by ParsePath. The path we’ve used is /404.html.

In case you’re wondering, the last two true and false arguments to ParsePath

indicate the path we’ve given is absolute and doesn’t end in a

slash. ParsePath is a representation for a URI path, and exposing

if the path is absolute or ends in a slash are useful flags for matching on, but

in this case, they’re not relevant.

Be aware that 404 pages, when rendered this way, won’t have a location in the site map. That’s because we’ve not included the 404.html file in the site map, and we don’t have to, because we’re rendering via NotFoundAsTemplate rather than sending a redirect to /404.html. However, this means that if you display an error page using a template that contains Menu.builder or similar (as templates-hidden/default.html does), you’ll see "No Navigation Defined." In that case, you’ll probably want to use a different template on your 404 page.

As an alternative, you could include the 404 page in your site map but make it hidden when the site map is displayed via the Menu.builder:

Menu.i("404")/"404">>Hidden

Catch Any Exception shows how to catch any exception thrown from your code.

You want to show a customised page for certain HTTP status codes.

Use LiftRules.responseTransformers to match against the response and

supply an alternative.

As an example, suppose we want to provide a custom page for 403 ("Forbidden") statuses created in our Lift application. Further, suppose that this page might contain snippets so will need to pass through the Lift rendering flow.

To do this in Boot.scala, we define the LiftResponse we want to generate

and use the response when a 403 status is about to be produced by Lift:

defmy403:Box[LiftResponse]=for{session<-S.sessionreq<-S.requesttemplate=Templates("403"::Nil)response<-session.processTemplate(template,req,req.path,403)}yieldresponseLiftRules.responseTransformers.append{caserespifresp.toResponse.code==403=>my403openOrrespcaseresp=>resp}

The file src/main/webapp/403.html will now be served for requests that generate 403 status codes. Other non–403 responses are left untouched.

LiftRules.responseTransformers allows you to supply

LiftResponse => LiftResponse functions to change a response right at the end

of the HTTP processing cycle. This is a very general mechanism: in this

example, we are matching on a status code, but we could match on anything

exposed by LiftResponse.

In the recipe, we respond with a template, but you may find

situations where other kinds of responses make sense, such as an InMemoryResponse.

You could even simplify the example to just this:

LiftRules.responseTransformers.append{caserespifresp.toResponse.code==403=>RedirectResponse("/403.html")caseresp=>resp}

In Lift 3, responseTransformers will be modified to be a partial function, meaning you’ll be able to leave off the final case resp => resp part of this example.

That redirect will work just fine, with the only downside that the HTTP status code sent back to the web browser won’t be a 403 code.

A more general approach, if you’re customising a number of pages, would be to define the status codes you want to customise, create a page for each, and then match only on those pages:

LiftRules.responseTransformers.append{caseCustomised(resp)=>respcaseresp=>resp}objectCustomised{// The pages we have customised: 403.html and 500.htmlvaldefinedPages=403::500::Nildefunapply(resp:LiftResponse):Option[LiftResponse]=definedPages.find(_==resp.toResponse.code).flatMap(toResponse)

deftoResponse(status:Int):Box[LiftResponse]=for{session<-S.sessionreq<-S.requesttemplate=Templates(status.toString::Nil)response<-session.processTemplate(template,req,req.path,status)}yieldresponse}

The convention in Customised is that we have an HTML file in src/main/webapp that matches

the status code we want to show, but of course you can change that by using a different

pattern in the argument to Templates.

One way to test the previous examples is to add the following to Boot.scala to make all requests to /secret return a 403:

valProtected=If(()=>false,()=>ForbiddenResponse("No!"))valentries=List(Menu.i("Home")/"index",Menu.i("secret")/"secret">>Protected,// rest of your site map here...)

If you request /secret, a 403 response will be triggered, which will match the response transformer showing you the contents of the 403.html template.

Custom 404 Page explains the built-in support for custom 404 messages.

Catch Any Exception shows how to catch any exception thrown from your code.

You want to include a clickable link in your S.error, S.notice, or

S.warning messages.

Include a NodeSeq containing a link in your notice:

S.error("checkPrivacyPolicy",<span>Seeour<ahref="/policy">privacypolicy</a></span>)

You might pair this with the following in your template:

<spandata-lift="Msg?id=checkPrivacyPolicy"></span>

You may be more familiar with the S.error(String) signature of Lift notices than the versions

that take a NodeSeq as an argument, but the String versions just convert the String argument

to a scala.xml.Text kind of NodeSeq.

Lift notices are described on the wiki.

You want a button or a link that, when clicked, will trigger a download in the browser rather than visiting a page.

Create a link using SHtml.link, provide a function to return a LiftResponse, and wrap the response in a ResponseShortcutException.

As an example, we will create a snippet that shows the user a poem and provides a link to download the poem as a text file. The template for this snippet will present each line of the poem separated by a <br>:

<h1>A poem</h1><divdata-lift="DownloadLink"><blockquote><spanclass="poem"><spanclass="line">line goes here</span><br/></span></blockquote><ahref="">download link here</a></div>

The snippet itself will render the poem and replace the download link with one that will send a response that the browser will interpret as a file to download:

packagecode.snippetimportnet.liftweb.util.Helpers._importnet.liftweb.http._importxml.TextclassDownloadLink{

valpoem="Roses are red,"::"Violets are blue,"::"Lift rocks!"::"And so do you."::Nildefrender=".poem"#>poem.map(line=>".line"#>line)&"a"#>downloadLinkdefdownloadLink=SHtml.link("/notused",()=>thrownewResponseShortcutException(poemTextFile),Text("Download"))defpoemTextFile:LiftResponse=InMemoryResponse(poem.mkString("\n").getBytes("UTF-8"),"Content-Type"->"text/plain; charset=utf8"::"Content-Disposition"->"attachment; filename=\"poem.txt\""::Nil,cookies=Nil,200)}

Recall that SHtml.link generates a link and executes a function you supply before following the link.

The trick here is that wrapping the LiftResponse in a ResponseShortcutException will indicate

to Lift that the response is complete, so the page being linked to (in this case, notused) won’t be processed. The browser is happy: it has a response to the link the user clicked on, and will render it how it wants to, which in this case will probably be by saving the file to disk.

SHtml.link works by generating a URL that Lift associates with the function you give it. On a page called downloadlink, the URL will look something like:

downloadlink?F845451240716XSXE3G=_#notused

When that link is followed, Lift looks up the function and executes it, before processing the linked-to resource. However, in this case, we are shortcutting the Lift pipeline by throwing this particular exception. This is caught by Lift, and the response wrapped by the exception is taken as the final response from the request.

This shortcutting is used by S.redirectTo via ResponseShortcutException.redirect. This companion object also defines shortcutResponse, which you can use like this:

importnet.liftweb.http.ResponseShortcutException._defdownloadLink=SHtml.link("/notused",()=>{S.notice("The file was downloaded")throwshortcutResponse(poemTextFile)},Text("Download"))

We’ve included an S.notice to highlight that throw shortcutResponse will process Lift notices when the page next loads, whereas throw new ResponseShortcutException does not. In this case, the notice will not appear when the user downloads the file, but it will be included the next time notices are shown, such as when the user navigates to another page. For many situations, the difference is immaterial.

This recipe has used Lift’s stateful features. You can see how useful it is to be able to close over state (the poem), and offer the data for download from memory. If you’ve created a report from a database, you can offer it as a download without having to regenerate the items from the database.

However, in other situations you might want to avoid holding this data as a function on a link. In that case, you’ll want to create a REST service that returns a LiftResponse.

REST looks at REST-based services in Lift.

Streaming Content discusses InMemoryResponse and similar responses to return content to the browser.

For reports, the Apache POI project includes libraries for generating Excel files; and OpenCSV is a library for generating CSV files.

Supply a mock request to Lift’s MockWeb.testReq, and run your test in the context of the Req supplied by testReq.

The first step is to add Lift’s Test Kit as a dependency to your project in build.sbt:

libraryDependencies+="net.liftweb"%%"lift-testkit"%"2.5"%"test"

To demonstrate how to use testReq, we will test a function that detects a Google crawler. Google identifies

crawlers via various User-Agent header values, so the function we want to test would look like this:

packagecode.libimportnet.liftweb.http.ReqobjectRobotDetector{valbotNames="Googlebot"::"Mediapartners-Google"::"AdsBot-Google"::Nildefknown_?(ua:String)=botNames.exists(uacontains_)defgooglebot_?(r:Req):Boolean=r.header("User-Agent").exists(known_?)}

We have the list of magic botNames that Google sends as a user agent, and we define a check, known_?, that takes the user agent string and looks to see if any robot satisfies the condition of being contained in that user agent string.

The googlebot_? method is given a Lift Req object, and from this, we look up the header. This evaluates to a Box[String], as it’s possible there is no header. We find the answer by seeing if there exists in the Box a value that satisfies the known_? condition.

To test this, we create a user agent string, prepare a MockHttpServletRequest with the header, and use Lift’s MockWeb to turn the low-level request into a Lift Req for us to test with:

packagecode.libimportorg.specs2.mutable._importnet.liftweb.mocks.MockHttpServletRequestimportnet.liftweb.mockweb.MockWebclassSingleRobotDetectorSpecextendsSpecification{"Google Bot Detector"should{"spot a web crawler"in{valuserAgent="Mozilla/5.0 (compatible; Googlebot/2.1)"// Mock a request with the right header:valhttp=newMockHttpServletRequest()http.headers=Map("User-Agent"->List(userAgent))// Test with a Lift Req:MockWeb.testReq(http){r=>RobotDetector.googlebot_?(r)mustbeTrue}}}}

Running this from SBT with the test command would produce:

[info] SingleRobotDetectorSpec [info] [info] Google Bot Detector should [info] + spot a web crawler [info] [info] Total for specification SingleRobotDetectorSpec [info] Finished in 18 ms [info] 1 example, 0 failure, 0 error

Although MockWeb.testReq is handling the creation of a Req for us, the environment for that Req is supplied by the MockHttpServletRequest. To configure a request, create an instance of the mock and mutate the state of it as required before using it with testReq.

Aside from HTTP headers, you can set cookies, content type, query parameters, the HTTP method, authentication type, and the body. There are variations on the body assignment, which conveniently set the content type depending on the value you assign:

JValue will use a content type of application/json.

NodeSeq will use text/xml (or you can supply an alternative).

String uses text/plain (unless you supply an alternative).

Array[Byte] does not set the content type.

In the example test shown earlier, it would be tedious to have to set up the same code repeatedly for different user agents. Specs2's Data Table provides a compact way to run different example values through the same test:

packagecode.libimportorg.specs2._importmatcher._importnet.liftweb.mocks.MockHttpServletRequestimportnet.liftweb.mockweb.MockWebclassRobotDetectorSpecextendsSpecificationwithDataTables{defis="Can detect Google robots"^{"Bot?"||"User Agent"|true!!"Mozilla/5.0 (Googlebot/2.1)"|true!!"Googlebot-Video/1.0"|true!!"Mediapartners-Google"|true!!"AdsBot-Google"|false!!"Mozilla/5.0 (KHTML, like Gecko)"|>{(expectedResult,userAgent)=>{valhttp=newMockHttpServletRequest()http.headers=Map("User-Agent"->List(userAgent))MockWeb.testReq(http){r=>RobotDetector.googlebot_?(r)must_==expectedResult}}}}}

The core of this test is essentially unchanged: we create a mock, set the user agent, and check the result of googlebot_?. The difference is that Specs2 is providing a neat way to list

out the various scenarios and pipe them through a function.

The output from running this under SBT would be:

[info] Can detect Google robots [info] + Bot? | User Agent [info] true | Mozilla/5.0 (Googlebot/2.1) [info] true | Googlebot-Video/1.0 [info] true | Mediapartners-Google [info] true | AdsBot-Google [info] false | Mozilla/5.0 (KHTML, like Gecko) [info] [info] Total for specification RobotDetectorSpec [info] Finished in 1 ms [info] 1 example, 0 failure, 0 error

Although the expected value appears first in our table, there’s no requirement to put it first.

The Lift wiki discusses this topic and also other approaches such as testing with Selenium.

Install the Lift Textile module in your build.sbt file by adding the following to the list of dependencies:

"net.liftmodules"%%"textile_2.5"%"1.3"

You can then use the module to render Textile using the toHtml method.

For example, starting SBT after adding the module and running the SBT console command allows you to try out the module:

scala>importnet.liftmodules.textile._importnet.liftmodules.textile._scala>TextileParser.toHtml("""| h1. Hi!|| The module in "Lift":http://www.liftweb.net for turning Textile markup| into HTML is pretty easy to use.|| * As you can see.| * In this example.| """)res0:scala.xml.NodeSeq=NodeSeq(,<h1>Hi!</h1>,

,<p>The module in<ahref="http://www.liftweb.net">Lift</a>for turning Textile markup<br></br>into HTML is pretty easy to use.</p>, ,<ul><li>As you can see.</li><li>In this example.</li></ul>, , )

It’s a little easier to see the output if we pretty print it:

scala>valpp=newPrettyPrinter(width=35,step=2)pp:scala.xml.PrettyPrinter=scala.xml.PrettyPrinter@54c19de8scala>pp.formatNodes(res0)res1:String=

<h1>Hi!</h1><p>The module in<ahref="http://www.liftweb.net">Lift</a>for turning Textile markup<br></br>into HTML is pretty easy to use.</p><ul><li>As you can see.</li><li>In this example.</li></ul>

There’s nothing special code has to do to become a Lift module, although there are common conventions: they typically are packaged as net.liftmodules, but don’t have to be; they usually depend on a version of Lift; they sometimes use the hooks provided by LiftRules to provide a particular behaviour. Anyone can create and publish a Lift module, and anyone can contribute to existing modules. In the end, they are declared as dependencies in SBT, and pulled into your code just like any other dependency.

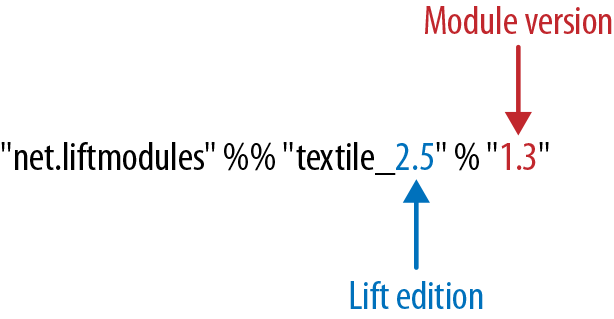

The dependency name is made up of two elements: the name and the "edition" of Lift that the module is compatible with, as shown in Figure 2-1. By "edition" we just mean the first part of the Lift version number. A "2.5" edition implies the module is compatible with any Lift release that starts "2.5."

This structure has been adopted because modules have their own release cycle, independent of Lift. However, modules may also depend on certain features of Lift, and Lift may change APIs between major releases, hence the need to use part of the Lift version number to identify the module.

There’s no real specification of what Textile is, but there are references available that cover the typical kinds of markup to enter and what HTML you can expect to see.

The unit tests for the Textile module give you a good set of examples of what is supported.

Sharing Code in Modules describes how to create modules.

This chapter looks at how to process form data with Lift: submitting forms, working with form elements. The end result of a form submission can be records being updated in a database, so you may also find Relational Database Persistence with Record and Squeryl or MongoDB Persistence with Record useful, as they discuss relational databases and MongoDB, respectively.

To the extent that form processing is passing data to a server, there are also recipes in JavaScript, Ajax, and Comet that are relevant to form processing.

You’ll find many of the examples from this chapter as source code at https://github.com/LiftCookbook/cookbook_forms.

You want to process form data in a regular, old-fashioned, non-Ajax way.

Extract form values with S.param, process the values, and produce some output.

For example, we can show a form, process an input value, and give a message back as a notice. The template is a regular HTML form, with the addition of a snippet:

<formdata-lift="Plain"action="/plain"method="post"><inputtype="text"name="name"placeholder="What's your name?"><inputtype="submit"value="Go"></form>

In the snippet, we can pick out the value of the field name with S.param("name"):

packagecode.snippetimportnet.liftweb.common.Fullimportnet.liftweb.http.Simportnet.liftweb.util.PassThruobjectPlain{defrender=S.param("name")match{caseFull(name)=>S.notice("Hello "+name)S.redirectTo("/plain")case_=>PassThru}}

The first time through this snippet, there will be no parameter, so we just pass back the form unchanged to the browser, which is what PassThru is doing. You can then enter a value into the name field and submit the form. This will result in Lift processing the template again, but this time, with a value for the name input. The result will be your browser redirected to a page with a message set for display.

Manually plucking parameters from a request isn’t making the best use of Lift, but sometimes you need to do it, and S.param is the way you can process request parameters.

The result of S.param is a Box[String], and in the previous example, we pattern match on this value. With more than one parameter, you’ve probably seen S.param used in this way:

defrender={for{name<-S.param("name")pet<-S.param("petName")}{S.notice("Hello %s and %s".format(name,pet))S.redirectTo("/plain")}PassThru}

If both name and petName are provided, the body of the for will be evaluated.

Related functions on S include:

S.params(name)Produces a List[String] for all the request parameters with the given name

S.post_? and S.get_?Tells you if the request was a GET or POST

S.getRequestHeader(name)Gives the Box[String] for a header in the request with the given name

S.requestAccesses the Box[Req], which gives you access to further HTTP-specific information about the request

As an example of using S.request, we could produce a List[String] for the values of all request parameters that have name somewhere in their parameter name:

valnames=for{req<-S.request.toListparamName<-req.paramNamesifparamName.toLowerCasecontains"name"value<-S.param(paramName)}yieldvalue

Note that by opening up S.request we can access all the parameter names via the paramNames function on Req.